Data Center Infrastructure for Networking Engineers

Overall rating: 4.68 Instructor: 4.84 Materials: 4.74

Every major vendor is promoting server virtualization and cloud computing as the magic technologies that will help you cut data center costs while increasing productivity and service availability. It’s true – data center redesign can save you a lot of money ... assuming you can make sense of the incomprehensible soup of technologies and acronyms and choose wisely.

Goals

This webinar will help you reach two important goals: understand the data center acronym soup and build a conceptual framework of the data center technologies and solutions.

The acronym soup

Can you describe the benefits of server virtualization in two sentences? How about the reasons to deploy a Storage Area Network (SAN)? When building a SAN solution, should you use FC, FCoE or iSCSI? Or is it better to use NFS or CIFS? What is the function of an HBA? Why would you need DCB? Can TRILL benefit your network? Should you wait for switches supporting L2MP? Or is OTV a better alternative? Should you buy MPLS/VPN or VPLS services when building a disaster recovery site?

After attending this webinar, you’ll be able to answer all of the above questions.

We need to speak the same language

A data center redesign is most successful when the application teams, server administrators and networking engineers work hand-in-hand from the beginning of the project; late involvement of the networking team (usually when the performance of the new architecture doesn’t meet expectations) often results in finger pointing and blame shifting.

To become involved in the early stages of new data center projects, you have to understand the challenges faced by server and storage administrators, be fluent in the technologies they commonly use and prove that you can help them build better solutions. This webinar will give you a clear overview of data center challenges and the conceptual framework you need to quickly absorb the details of new technologies and solutions as they become available.

Target audience

This webinar is ideal for IT managers and networking engineers who have to understand the big picture: how the Data Center buzzwords and technologies they hear about relate to reduced costs and increased availability of their Data Center services. It will also help engineers with a networking or programming background understand the architectural options and solutions used in modern Data Centers.

Contents

Business Trends

You want to truly understand a complex problem? Put aside the technology for a moment and follow the money. Data centers are no different. We’re all faced with pressures to reduce the capital and operational expenses while increasing application availability. The only way to reach this goal is through aggressive utilization of modern virtualization technologies that help you increase equipment utilization and reduce electricity and cooling costs.

Load Balancing and Scale-Out Architectures

This section covers the most common load balancing mechanisms:

- Application-level load balancing: worker processes, event-based web servers, FastCGI offload, caching servers and reverse proxies, database sharding and replicas.

- Network-based load balancing: local and global anycasting, local and global DNS load balancing, and load balancers operating in transparent mode, source-NAT mode and direct server return.

- Application delivery controller features, including session stickiness, TCP parameter adjustment, permanent HTTP sessions, SSL offload, and inter-protocol gateways (SPDY-to-HTTP).
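To make one of these terms concrete, here is a minimal Python sketch of session stickiness, assuming the simplest possible approach: hashing a client identifier (here a source IP address) to pick a backend, so that repeat requests from the same client land on the same server. The server names, the addresses and the `pick_server` helper are hypothetical; real application delivery controllers typically offer several other stickiness methods (cookies, for example), and this sketch does not describe any particular product.

```python
import hashlib


def pick_server(client_id: str, servers: list[str]) -> str:
    """Return the backend for a client; the same client_id always maps to the same server."""
    if not servers:
        raise ValueError("at least one backend server is required")
    # Hash the client identifier so clients are spread across the server pool.
    digest = hashlib.sha256(client_id.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


# Hypothetical backend pool and client addresses (documentation address range).
servers = ["web-1", "web-2", "web-3"]
for client in ["192.0.2.10", "192.0.2.11", "192.0.2.10"]:
    print(client, "->", pick_server(client, servers))
```

The first and the third request come from the same client and are therefore sent to the same backend. Note the trade-off of this naive scheme: changing the number of servers remaps most clients, which is one reason production load balancers tend to use more elaborate methods.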

Server Virtualization

Server virtualization (the ability to run multiple logical machines on the same physical hardware) is the core technology of modern data center design. It significantly increases equipment utilization, thus reducing power consumption, and drastically reduces the average server deployment time.

This section describes:

- A brief history of server virtualization

- Virtualizing server hardware - from IBM mainframes to virtual machines

- Hypervisor types

- VM access to external resources (networking and storage)

- Benefits of server virtualization including high-availability solutions and automatic power reduction measures

- Operating system-level virtualization: jails, namespaces and containers

- Blade servers and other approaches to hyper-converged compute resources

LAN Virtualization

Logical servers running within the same physical server might have different security requirements; sometimes you even have to isolate them from each other. Virtual Local Area Networks (VLANs) and private VLANs (PVLANs) were the traditional infrastructure providing the inter-server isolation.

However, be aware that every server virtualization platform contains a virtual switch that extends the bridging domain of your network. The introduction of virtual switches has to be carefully planned ... or you might end up with a fantastic network meltdown due to a bridging loop or a security breach due to extra connectivity invisible to the networking gear.

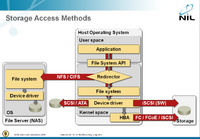

Storage Area Networks

You can only benefit from advanced server virtualization technologies if the storage used in your data center supports access to the same data from multiple physical servers. A storage area network (SAN) is thus a mandatory component of modern data center designs.

Understanding the evolution of SANs helps you understand the SAN challenges we’re facing today. This section describes how SCSI evolved into Fibre Channel (FC) and iSCSI, explains the modern alternatives (iSCSI, FCoE or NFS), and covers ways of extending storage networks over large distances.

Bridging, Routing or Switching

Some application developers and server administrators would like to see the Data Center designed as a huge bridged network, as this “design” makes their lives extremely easy: every host can communicate with every other host even when using weird technologies that should never have been deployed (example: Microsoft’s NLB in Unicast Mode). The networking engineer trying to introduce scalability and security in such an environment is clearly doomed to fail.

This section describes the true needs for data center bridging, emerging bridging technologies (DCB, L2MP, TRILL), their potential usability and pitfalls, as well as the proper position of routing in the data center design.

Disaster Recovery and High Availability

Very high application availability is a must for any business that relies heavily on IT infrastructure for its day-to-day operations. The required availability is usually achieved with the help of redundant data centers, operating in active/standby or even active/active (load balancing) configurations.

This section describes the basics of high availability data center design and demonstrates how you can use numerous Service Provider services (including dark fiber, MPLS/VPN and VPLS services) to build your redundant infrastructure.
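As a rough illustration of why redundant data centers raise availability, the following Python sketch computes the chance that at least one site is up. It rests on a strong simplifying assumption: sites fail independently of each other, and failover between them is instant and always works. Real designs rarely meet that assumption, so treat the result as an optimistic upper bound. The 99% per-site figure and both function names are hypothetical, chosen only for the example.

```python
def combined_availability(site_availability: float, sites: int) -> float:
    """Probability that at least one of `sites` independent sites is available."""
    if not 0.0 <= site_availability <= 1.0:
        raise ValueError("site_availability must be between 0 and 1")
    if sites < 1:
        raise ValueError("sites must be at least 1")
    # All sites must be down at the same time for the service to be down.
    return 1.0 - (1.0 - site_availability) ** sites


def downtime_hours_per_year(availability: float) -> float:
    """Expected unavailable hours in a 365-day year."""
    return (1.0 - availability) * 24 * 365


# Hypothetical figure: each data center is available 99% of the time.
for sites in (1, 2):
    availability = combined_availability(0.99, sites)
    print(f"{sites} site(s): {availability:.4%} available, "
          f"about {downtime_hours_per_year(availability):.2f} hours down per year")
```

Under these assumptions, a single 99% site is expected to be down for roughly 87.6 hours per year, while two such sites together are down for less than one hour. Shared dependencies (a common WAN provider, a replication fault, an operator error applied to both sites) break the independence assumption and reduce the benefit.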

Exclusions

The webinar does not address device configurations or other low-level technical details. We can cover these details in a follow-up discussion during the on-site delivery or you could attend in-depth technology-specific webinars as they become available.

Happy Campers

Feedback from the attendees

- Fantastic use of 4.5 hours and the cost was very reasonable! Would definitely do another session like this sometime!

- Patrick Swackhammer

- A lot of the raw material on the technology is available via blog entries, but it was helpful to have the material tied together & receive guidance.

- Dave Coulthart

- Although a bit long (but you knew that already!) this webinar was DEFINITELY packed with information. I found many parts useful, but as you explained, not everything in there was for everybody. You gave a very good demonstration of how the data center works from the network perspective and I especially liked the overview/refreshers of SCSI, FCoE, FC, and the other "data center" centric technologies. I remember working with all this stuff in my past but never really had the view of where they came from originally and who begat who. Depending on timing, I will most likely see you in the DMVPN as that is one project I am planning in the near future. Thank you!

- Bill McFarlin

- I thought this was excellent, the kind of information based on real world experience that you don't get from the usual training courses

- ewells

- Excellent session. Comprehensive; well planned; good pace. I would certainly attend another providing the topic is relevant to my needs.

- Steven Milsom

- The Webinar was very informative and you clearly know your material. I am primarily a network engineer and as such I do not work directly in a Data Center environment but the delivery of the material was great and there was no problem in understanding the material presented.

- Kissan Brown

- Very cost effective review of technologies/methodologies.

- Chris Clark

- Excellent session with good content. Besides those new to the data center or virtualization, I'd also recommend this session to anyone who's already familiar with virtualization but needs to fill in gaps in their knowledge. The sections covering data center interconnection and LAN virtualization were very informative.

- Chris Church

- Four and half hours packed with information, bits, pieces and terms explained, how it works, how it ties together. Great value for people who are entering the world of DCs and clouds, with all caveats and recommendation nicely explained. Also for people (like me from WAN world) who do not work directly with these technologies but need to interact with teams that do = "speak their language". One could feel Ivan's interest (Excitement? Mastery?) in these topics, information were kept up to date with latest announcements, and of course the way topics were explained and questions answered. Thumbs up!

- Alexandra Stanovska

Tweets

- Fantastic 4.5hr webinar from @ioshints today on Data Center info for Network Engineers

- @swackhap

- Just finished watching @ioshints DC3.0 Webinar. I strongly recommend it for network engineers looking at Data Center architecture.

- @fsmontenegro

- Normally, I don't do advertisements, but @ioshints DataCenter 3.0 webinar was awesome. VMWare, iSCSI,...Next is on 18 Nov http://is.gd/gAHZL

- @verbosemode

- Finished attending @ioshints "DC 3.0 For network engineers" webinar. Very highly recommended, awesome work. 1st impressions blog later.

- @yandyr

Ivan Pepelnjak, CCIE#1354 Emeritus, is an independent network architect, book author, blogger and regular speaker at industry events like Interop, RIPE and regional NOG meetings. He has been designing and implementing large-scale service provider and enterprise networks since 1990, and is currently using his expertise to help multinational enterprises and large cloud- and service providers design next-generation data center and cloud infrastructure using Software-Defined Networking (SDN) and Network Function Virtualization (NFV) approaches and technologies.
